I previously blogged about setting up, monitoring and using ConfigMaps for a Cardano relay using Kubernetes. This post describes the changes to also run a Producer node, which connects to the relay and uses Kubernetes secrets for its keys.

Posts in this series:

- Step 1: Setting up Cardano Relays using Kubernetes/microk8s

- Step 2: Monitoring Cardano Relays on Kubernetes with Grafana and Prometheus

- Step 3: Using Kubernetes ConfigMaps for Cardano Node Topology Config

- Step 4: Setting up a Cardano Producer node using Kubernetes/microk8s

If you find this post useful or are looking for somewhere to delegate while setting up your own pool, check out my pool [CODER]! 😀

Creating and Registering a Pool and Cold Keys

This post assumes you have already set up your pool and created the required keys, and focuses only on setting up the Producer node in Kubernetes. If your pool is not set up yet, follow the official Cardano docs.

Be sure to use an offline machine to create the cold keys, and keep secure backups. If you have a significant pledge, I would consider using a hardware wallet to secure it (and for the rewards address) instead of the wallet on the cold machine. Make sure you have good security and backups of the hardware wallet's seed phrase! This AdaLite post has some good instructions for including a hardware wallet as a pool owner. Doing this means that if your cold machine is lost or compromised, you stand to lose only the deposit and future rewards, rather than also any unclaimed rewards and the pledge.

Creating Kubernetes Secrets

After following the pool creation instructions above, you should have three files from your cold environment that your Producer node will need:

- kes.skey

- vrf.skey

- node.cert

No other files from the cold machine should be used on the producer (although they should be safely backed up from the cold machine).

These three files will be stored in Kubernetes as Secrets. By default, Kubernetes only base64 encodes Secret values. This is not encryption; it exists simply to support binary files. For simplicity this post sticks with the default, but you should consider enabling encryption at rest for Secrets, and make sure your config files are secure and cannot be read by anything that does not need them.

If these three files are compromised, an attacker does not gain control of your pool, your deposit or your pledge. However, they could run a second producer for your pool (which could result in short-lived forks that harm the network) or work out which slots your pool is scheduled to produce blocks in (making a DoS attack a little easier).

DO NOT use online web pages for base64 encoding Kubernetes secrets!

To base64 encode the contents of a file, you can use the base64 command:

```shell
$ echo 'this is a test' > testfile.txt
$ base64 -i testfile.txt
dGhpcyBpcyBhIHRlc3QK
```

You can verify the contents by decoding with base64 -d:

```shell
$ echo "dGhpcyBpcyBhIHRlc3QK" | base64 -d
this is a test
```

These values can then be added to a new Kubernetes config file, using as keys the filenames each file should have when it is mounted into the pod:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mainnet-producer-keys
data:
  kes.skey: ewogXXXXXXXXXXXXXXXXXXXXXXXX== # replace with base64 encoded version of this file
  vrf.skey: ICJ0XXXXXXXXXXXXXXXXXXXXXXX== # replace with base64 encoded version of this file
  node.cert: eXBXXXXXXXXXXXXXXXXXXXXXXXX== # replace with base64 encoded version of this file
```
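Pasting base64 values by hand is error-prone, particularly because GNU base64 wraps its output every 76 characters, which would split a value across several YAML lines. Here is a minimal sketch that writes the Secret manifest from the three files, assuming they sit together in one directory. make_secret_manifest and b64_one_line are hypothetical helper names, not part of any tool:

```shell
#!/usr/bin/env bash
# Sketch: build the mainnet-producer-keys Secret manifest from the three key files.
# Assumes kes.skey, vrf.skey and node.cert are all in the directory passed as $1.

# Encode a file as a single line of base64.
# GNU base64 wraps at 76 characters by default; stripping newlines works on both
# GNU and macOS without relying on a platform-specific flag such as -w 0.
b64_one_line() {
  base64 < "$1" | tr -d '\n'
}

# Print the Secret manifest to stdout.
make_secret_manifest() {
  local dir="$1" f
  printf 'apiVersion: v1\n'
  printf 'kind: Secret\n'
  printf 'metadata:\n'
  printf '  name: mainnet-producer-keys\n'
  printf 'data:\n'
  for f in kes.skey vrf.skey node.cert; do
    # The data key doubles as the filename inside the pod's /keys mount
    printf '  %s: %s\n' "$f" "$(b64_one_line "$dir/$f")"
  done
}
```

For example, make_secret_manifest ~/producer-keys > mainnet-producer-keys.yaml (the directory is illustrative), then kubectl apply -f mainnet-producer-keys.yaml. The generated file contains the keys, so treat it as carefully as the keys themselves. Alternatively, kubectl create secret generic mainnet-producer-keys --from-file=kes.skey --from-file=vrf.skey --from-file=node.cert does the encoding for you, and adding --dry-run=client -o yaml prints the manifest instead of creating the Secret.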

Node Volume

Extend the node volumes config created for the relays to include a similar definition for the producer. The producer will need its own data folder, though to speed up the initial sync you may wish to copy the db folder in from an existing relay. You’ll also want to copy the configuration folder since that will likely be the same for each node now we’re using ConfigMaps for topology.

If you’re using a local node folder for storage, you should again set nodeAffinity to ensure this pod always runs on the same node (bear in mind this means the pod won’t run if this node is unavailable, but redundant storage is outside of the scope of this post).

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: cardano-mainnet-producer-data-pv-1
spec:
  capacity:
    storage: 25Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: local-storage-cardano-mainnet-producer
  # This volume is on the host named "mario"
  local:
    path: /home/danny/cardano/producer-mainnet/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            # Restrict this volume to a specific Kubernetes node by hostname
            - key: kubernetes.io/hostname
              operator: In
              values:
                - mario # hostname for this volume
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage-cardano-mainnet-producer
provisioner: kubernetes.io/no-provisioner
# https://kubernetes.io/docs/concepts/storage/storage-classes/#local
volumeBindingMode: WaitForFirstConsumer
```
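Copying the db and configuration folders in from a relay can be scripted. This is a rough sketch, assuming the relay's and producer's data folders are on the same host and that the relay pod has been stopped first so its database isn't being written to during the copy. The relay's host path isn't shown in this post, so the source path in the usage comments is a placeholder. The relay StatefulSet name is inferred from the pod name used in the alias later in this post, and seed_producer_data is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: seed a new producer data folder from an existing relay's data folder.
# Refuses to run if the producer already has a db, so a synced db is never clobbered.
seed_producer_data() {
  local src="$1" dst="$2"
  if [ -e "$dst/db" ]; then
    echo "refusing to overwrite existing $dst/db" >&2
    return 1
  fi
  mkdir -p "$dst"
  # -a copies recursively and preserves permissions and timestamps
  cp -a "$src/db" "$dst/db"
  # The configuration is likely identical for every node now that topology lives in ConfigMaps
  cp -a "$src/configuration" "$dst/configuration"
}

# Example usage (relay path is a placeholder):
#   kubectl scale statefulset cardano-mainnet-relay-deployment --replicas=0
#   seed_producer_data /path/to/relay/data /home/danny/cardano/producer-mainnet/data
#   kubectl scale statefulset cardano-mainnet-relay-deployment --replicas=1
```

If the relay's data lives on a different host, the same idea applies with rsync or scp in place of cp.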

Topology

The previously created topology ConfigMap file needs updating to include config for the producer, and also to point the relay to the producer.

I’ve used the hostnames cardano-mainnet-relay-service.default.svc.cluster.local and cardano-mainnet-producer-service.default.svc.cluster.local in my config files, which Kubernetes DNS automatically resolves to the IP addresses of the services created for the relays/producer respectively. This avoids needing to hardcode specific IP addresses when first setting this up.

I’ve omitted my other peers and used just the default IOHK hostname to keep the example shorter.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mainnet-producer-topology
data:
  topology.json: |
    {
      "Producers": [
        {
          "addr": "cardano-mainnet-relay-service.default.svc.cluster.local",
          "port": 30801,
          "valency": 1
        }
      ]
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: mainnet-relay-topology
data:
  topology.json: |
    {
      "Producers": [
        {
          "addr": "cardano-mainnet-producer-service.default.svc.cluster.local",
          "port": 30800,
          "valency": 1
        },
        {
          "addr": "relays-new.cardano-mainnet.iohk.io",
          "port": 3001,
          "valency": 2
        }
      ]
    }
```
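Because the producer should only ever talk to the relays, a cheap sanity check before applying a topology change is to confirm that every addr in the producer's topology is an in-cluster Service name. Here is a minimal sketch that reads topology.json on stdin, assuming in-cluster peers always use the full .svc.cluster.local form as above. check_producer_topology is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: fail if the producer topology lists any peer outside the cluster,
# or lists no peers at all. Reads topology.json on stdin.
check_producer_topology() {
  local addr count=0 bad=0
  # Extract every "addr": "..." value (handles pretty-printed and minified JSON)
  for addr in $(grep -o '"addr": *"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'); do
    count=$((count + 1))
    case "$addr" in
      *.svc.cluster.local) ;;  # in-cluster Service name, e.g. the relay service
      *) echo "non-cluster peer in producer topology: $addr" >&2; bad=1 ;;
    esac
  done
  if [ "$count" -eq 0 ]; then
    echo "no peers found; the producer would have nothing to sync from" >&2
    bad=1
  fi
  return "$bad"
}

# Example usage against the deployed ConfigMap (the dot in the key must be escaped):
#   kubectl get configmap mainnet-producer-topology \
#     -o jsonpath='{.data.topology\.json}' | check_producer_topology
```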

StatefulSet/Pod Definition

The configuration for the producer is mostly the same as for the relay, with a few differences:

- Names and volumes are updated to reference “producer” instead of “relay”

- A new volume is used to mount the secrets (keys) into the pod

- Additional arguments are passed to the node containing paths to the keys

- The exposed service does not bind a NodePort, because incoming connections come only from inside the cluster (the relays)

My full config looks like this:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: cardano-mainnet-producer-deployment
  labels:
    app: cardano-mainnet-producer-deployment
spec:
  serviceName: cardano-mainnet-producer
  replicas: 1
  selector:
    matchLabels:
      app: cardano-mainnet-node
      cardano-mainnet-node-type: producer
  template:
    metadata:
      labels:
        app: cardano-mainnet-node
        cardano-mainnet-node-type: producer
    spec:
      containers:
        - name: cardano-mainnet-producer
          image: inputoutput/cardano-node
          imagePullPolicy: Always
          ####### Additional arguments for keys
          args: ["run", "--config", "/data/configuration/mainnet-config.json", "--topology", "/topology/topology.json", "--database-path", "/data/db", "--socket-path", "/data/node.socket", "--port", "4000", "--shelley-kes-key", "/keys/kes.skey", "--shelley-vrf-key", "/keys/vrf.skey", "--shelley-operational-certificate", "/keys/node.cert"]
          ports:
            - containerPort: 12798
            - containerPort: 4000
          volumeMounts:
            - name: data
              mountPath: /data
            - name: topology
              mountPath: /topology
            ####### Additional volume mount for keys
            - name: keys
              mountPath: /keys
              readOnly: true
      volumes:
        - name: topology
          configMap:
            name: mainnet-producer-topology
        ####### Additional volume for keys
        - name: keys
          secret:
            secretName: mainnet-producer-keys
            defaultMode: 0400
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: local-storage-cardano-mainnet-producer
        resources:
          requests:
            storage: 25Gi
---
apiVersion: v1
kind: Service
metadata:
  name: cardano-mainnet-producer-service
spec:
  # ClusterIP: reachable only from inside the cluster (the relays), no NodePort is opened
  type: ClusterIP
  selector:
    app: cardano-mainnet-node
    cardano-mainnet-node-type: producer
  ports:
    - port: 30800
      targetPort: 4000
```

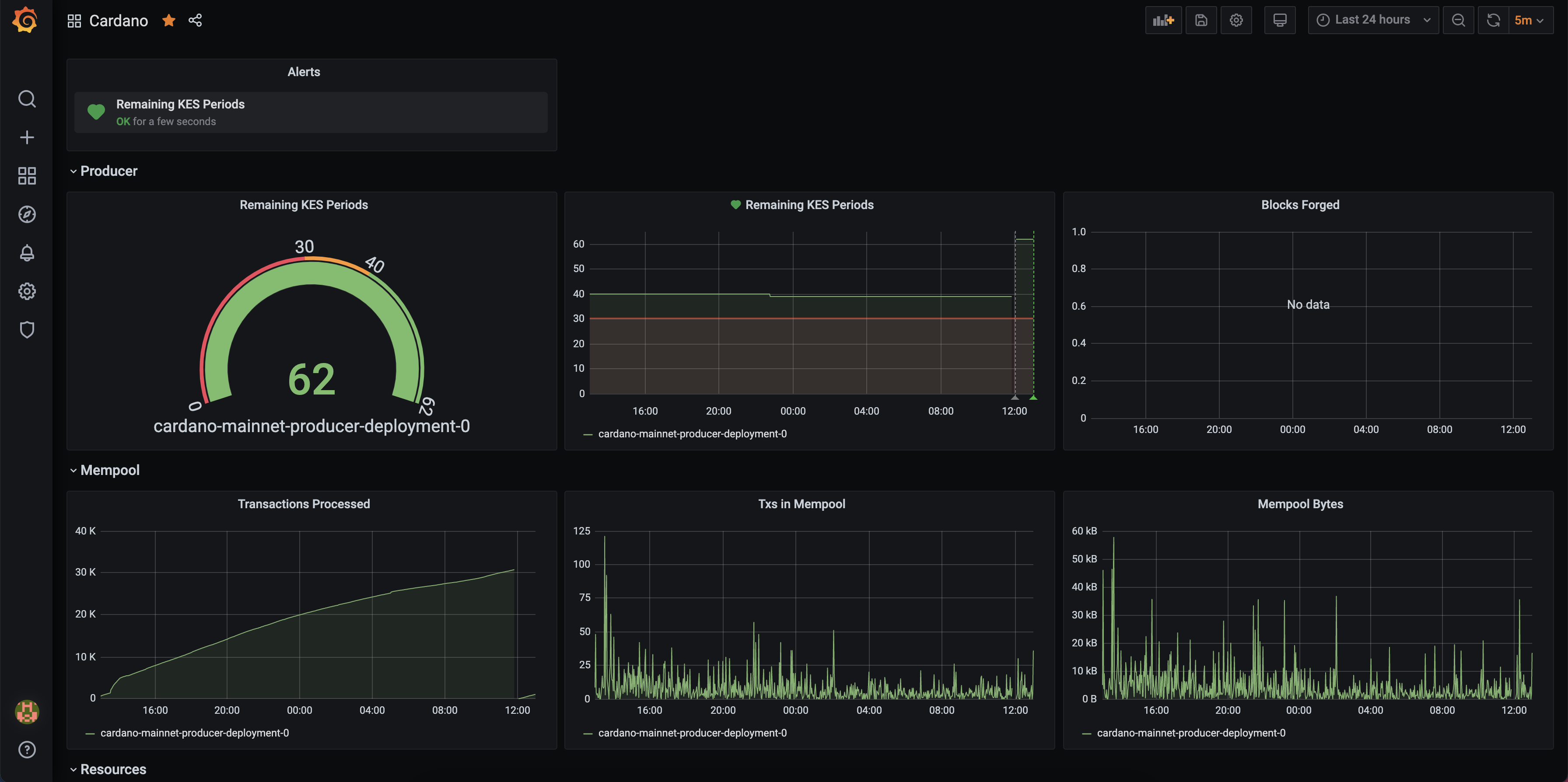

Once all of these files have been applied with kubectl apply -f, the producer node should start up and begin syncing. Since its pod is labelled app: cardano-mainnet-node, it should automatically be picked up by the Prometheus config we previously set up and appear in Grafana once it's fully running.
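Since the keys volume sets defaultMode: 0400, a quick check once the pod is running is to list the mounted files from inside it. This is a minimal sketch, assuming the image provides an ls binary whose long listing uses the usual nine columns. check_key_permissions is a hypothetical helper, and -L makes ls follow the symlinks Kubernetes creates inside Secret volumes so the real file modes are shown:

```shell
#!/usr/bin/env bash
# Sketch: verify every file in the producer pod's /keys mount is owner-read-only (0400).
check_key_permissions() {
  local pod="${1:-cardano-mainnet-producer-deployment-0}" bad=0 mode name
  # Columns: mode, links, owner, group, size, month, day, time, name
  while read -r mode _ _ _ _ _ _ _ name; do
    case "$mode" in
      total) continue ;;          # the "total N" header line
      -r--------) ;;              # 0400, as set by defaultMode
      *) echo "unexpected mode '$mode' on '$name'" >&2; bad=1 ;;
    esac
  done <<EOF
$(kubectl exec "$pod" -- ls -lL /keys)
EOF
  return "$bad"
}
```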

I created a bash alias that prints the last few blocks from each node, so I can quickly verify they're in sync and compare when each node received the same block:

```shell
alias print-block-sync='kubectl logs pod/cardano-mainnet-relay-deployment-0 | grep extended | tail -n 5 | sed "s/cardano-:cardano.node.ChainDB:Notice/Relay/" && \
kubectl logs pod/cardano-mainnet-producer-deployment-0 | grep extended | tail -n 5 | sed "s/cardano-:cardano.node.ChainDB:Notice/Producer/"'
```

```text
$ print-block-sync
[Relay 11:52:36.22] new tip: 92cb0868c150fa16602082b0aec3bc8d57a2edc5bbd5b3fe9ed7df401342e62b at slot 27785265
[Relay 11:52:47.38] new tip: af5dedd7f5d0fd77581e14c7b50cb5c49005b801ff6e99522ed6b18f41f60230 at slot 27785276
[Relay 11:52:54.37] new tip: d37c64c0dfae9f0dc465ed787719ed54e15e1145476cc296f64835cb6b6ef608 at slot 27785283
[Relay 11:53:12.17] new tip: 3c5a5f0ab9843fb71d2bb83b53ec957e4b82b405c5b11a141e567d1f7ccae6cd at slot 27785301
[Relay 11:53:18.25] new tip: 87b1b0b9a97e236476e9cefe8e7a926b66046a2a93f5aa3d96d5591f42a9e009 at slot 27785307
[Producer 11:52:36.23] new tip: 92cb0868c150fa16602082b0aec3bc8d57a2edc5bbd5b3fe9ed7df401342e62b at slot 27785265
[Producer 11:52:47.39] new tip: af5dedd7f5d0fd77581e14c7b50cb5c49005b801ff6e99522ed6b18f41f60230 at slot 27785276
[Producer 11:52:54.38] new tip: d37c64c0dfae9f0dc465ed787719ed54e15e1145476cc296f64835cb6b6ef608 at slot 27785283
[Producer 11:53:12.18] new tip: 3c5a5f0ab9843fb71d2bb83b53ec957e4b82b405c5b11a141e567d1f7ccae6cd at slot 27785301
[Producer 11:53:18.26] new tip: 87b1b0b9a97e236476e9cefe8e7a926b66046a2a93f5aa3d96d5591f42a9e009 at slot 27785307
```
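The alias above hardcodes two pods. The following sketch rewrites it as a shell function and assumes the pod names stay as in the alias. It accepts optional role=pod pairs, so more relays can be added. Instead of rewriting the node's log prefix, it prepends the role to each line, which means it doesn't depend on the exact log format beyond the "extended" match the alias already uses. print_block_sync and LINES_PER_NODE are hypothetical names:

```shell
#!/usr/bin/env bash
# Sketch: show the last few "extended" ChainDB log lines for each node, labelled by role.
# Arguments are optional role=pod pairs; defaults match the alias above.
print_block_sync() {
  local lines="${LINES_PER_NODE:-5}" pair role pod
  [ "$#" -gt 0 ] || set -- \
    Relay=cardano-mainnet-relay-deployment-0 \
    Producer=cardano-mainnet-producer-deployment-0
  for pair in "$@"; do
    role="${pair%%=*}"   # text before the first "="
    pod="${pair#*=}"     # text after the first "="
    kubectl logs "pod/$pod" | grep extended | tail -n "$lines" | sed "s/^/[$role] /"
  done
}
```

For example, print_block_sync Relay1=cardano-mainnet-relay-deployment-0 Relay2=cardano-mainnet-relay-deployment-1 Producer=cardano-mainnet-producer-deployment-0, where the second relay's pod name is illustrative.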

I also configured some additional panels and alerts in Grafana to easily monitor the remaining KES periods. It's important to periodically create new KES keys, copy them (along with an updated node.cert) into the Secrets file, and re-apply it!
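The "remaining KES periods" figure is simple arithmetic. This sketch assumes the mainnet Shelley genesis values slotsPerKESPeriod = 129600 and maxKESEvolutions = 62 (check mainnet-shelley-genesis.json in your configuration folder). It also assumes START is the --kes-period value used when node.cert was issued. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch of KES period arithmetic. Defaults are the mainnet Shelley genesis values;
# override the variables for other networks.
SLOTS_PER_KES_PERIOD="${SLOTS_PER_KES_PERIOD:-129600}"
MAX_KES_EVOLUTIONS="${MAX_KES_EVOLUTIONS:-62}"

# Current KES period for an absolute slot number (integer division).
kes_period() {
  echo $(( $1 / SLOTS_PER_KES_PERIOD ))
}

# KES periods left at absolute slot SLOT for a certificate issued with --kes-period START.
# The certificate is valid from START until START + MAX_KES_EVOLUTIONS (exclusive).
kes_periods_remaining() {
  local start="$1" slot="$2"
  echo $(( start + MAX_KES_EVOLUTIONS - $(kes_period "$slot") ))
}
```

With the last slot from the output above, kes_period 27785307 gives 214, and a certificate issued at period 200 would have kes_periods_remaining 200 27785307 = 48 periods left. At one slot per second each period is 36 hours, so a fresh certificate lasts 62 × 36 h = 93 days. After rotating, regenerate and kubectl apply the Secret. Restarting the pod (for example with kubectl rollout restart statefulset/cardano-mainnet-producer-deployment) is the simplest way I know to make sure the node re-reads the new files.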
